Solix Technologies Recognized In The Forrester Wave™: Enterprise Data Fabric, Q1 2024

Cloud Data Management Essentials You Need to Know

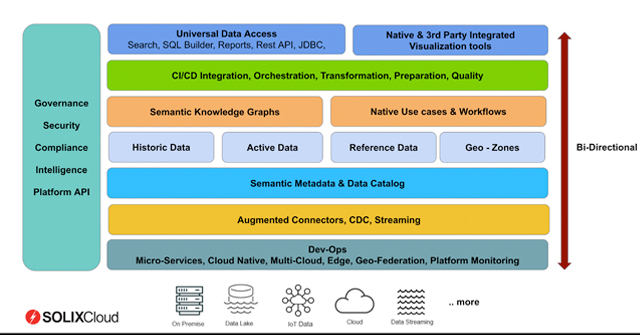

Digital transformation is pushing organizations to implement data-driven solutions, such as advanced analytics, artificial intelligence and machine learning, as fast as they can in order to transform business results. But achieving digital transformation is not easy for many organizations because it requires a new information architecture called the data fabric. The data fabric is a trusted, multi-cloud information architecture that automates data management functions end to end.

New infrastructure is required to support the data fabric. Real-time, event-driven processing across multi-cloud environments is the goal, and the data fabric relies on cloud native, open standards-based software for end-to-end integration of microservices, containerization and orchestration. Not only is the data fabric based on open standards, but the design also scales horizontally across virtualized, commodity infrastructure components. Large-scale data consumption can drive up data storage requirements, but object storage served in S3 buckets provides acceptable performance for most data-driven workloads and is an ideal solution for low-cost, bulk data storage.


Key Capabilities

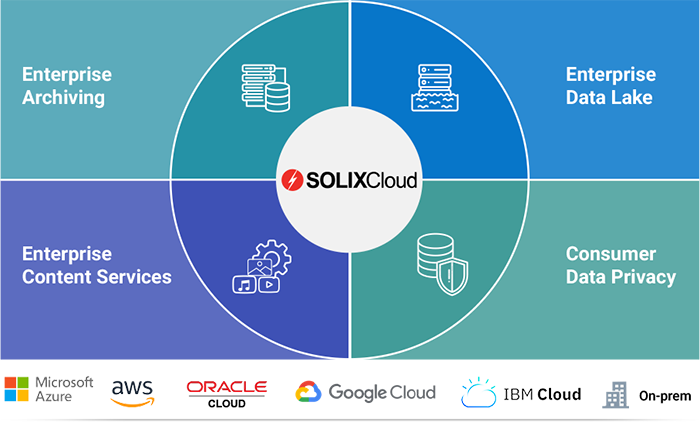

Cloud data management is an application framework that delivers four essential services to the data fabric.

- Connect
- Catalog
- Govern
- Discover

- Connect

  The data-driven enterprise relies on data pipelines to feed downstream applications, and cloud data management systems are responsible for collecting and moving data from any source to any target. Source data may be streaming or reside as files located anywhere, and very often, data is sourced from enterprise applications, mainframes, factory floors and SaaS systems. The ability to connect to any data source and not only move large volumes of data but also transform and prepare the data for use is a core competency for the data-driven enterprise.

- Catalog

  One benefit of a centralized data repository is an improved ability to explore your data. Like an inventory control system, data catalogs help you understand your data with indexes and descriptions, metadata, lineages and business glossaries to create improved context about your data.

- Govern

  With so much data under management, proper data governance is essential to ensure data security, compliance and privacy. Information lifecycle management (ILM) classifies and manages data based on policies and business rules throughout the entire data lifecycle, from collection and ingestion to data retention and, ultimately, to shredding.

- Discover

  In order to explore the entire data landscape, data access must be available not only through APIs but also through text search, ad hoc queries and structured reports. Role-based access controls granting the “least privilege necessary” ensure universal access to the data without compromising data privacy, security and compliance.
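The “least privilege necessary” idea behind role-based access control can be illustrated with a minimal Python sketch. The role names, action names and the `is_allowed` helper are hypothetical, chosen only for illustration, and do not describe any particular product's API. The key design point is that access is denied by default and granted only when a role explicitly lists an action.

```python
# Hypothetical roles mapped to the smallest set of actions each one needs.
# These names are illustrative assumptions, not part of any real product.
ROLE_PERMISSIONS = {
    "analyst": {"text_search", "structured_report"},
    "data_steward": {"text_search", "structured_report", "ad_hoc_query"},
    "integration_service": {"api_access"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: allow an action only if the role explicitly grants it."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Under these assumptions, an `analyst` can run a `text_search` but not an `ad_hoc_query`, and an unknown role is granted nothing.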

Cloud Data Management

Cloud data management runs either as a private cloud solution managed by in-house resources or as a SaaS subscription. Once data is captured and ingested into a common data platform, cloud data management applications provide tooling to pipeline, enrich, transform and prepare the data for later use. IT managers gain increased control because common data platforms enable security, data governance and access control to be managed from a single point. The top three cloud data management applications for the data fabric are data lakes, archives and consumer data privacy.

Top three cloud data management applications for the data fabric

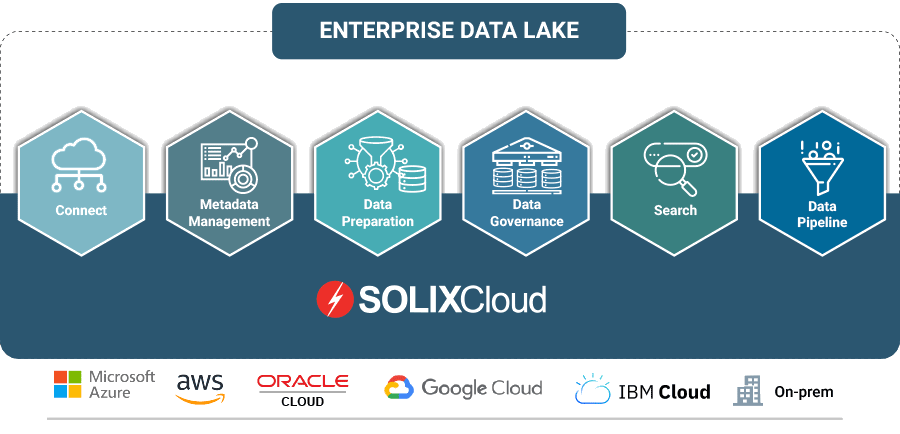

Data Lakes

Data lakes are repositories of “current” data collected to feed data-driven applications. Advanced analytics, machine learning and artificial intelligence applications often require some data transformation, data cleansing or data enrichment in preparation for processing. Data lakes are the building blocks of data pipelines, and they provide a data preparation platform to make data “fit for use” by data-driven applications.
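As a rough illustration of what making data “fit for use” can involve, the following Python sketch cleanses a small batch of records: it trims whitespace, normalizes email addresses, drops incomplete records and removes duplicates. The field names (`name`, `email`) and the `prepare` function are assumptions made for this example; real preparation steps depend on the data and the downstream application.

```python
def prepare(records):
    """Return cleansed, de-duplicated records (hypothetical 'name'/'email' schema)."""
    seen_emails = set()
    cleaned = []
    for rec in records:
        # Normalize: trim whitespace and lowercase the email address.
        name = (rec.get("name") or "").strip()
        email = (rec.get("email") or "").strip().lower()
        # Cleanse: skip records that are missing a required field.
        if not name or not email:
            continue
        # De-duplicate on the normalized email address.
        if email in seen_emails:
            continue
        seen_emails.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned
```

The first record seen for each email address is kept, which is a simplifying choice; a real pipeline might instead merge duplicates or keep the most recent one.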

Archives

Archives are centralized repositories of “historical” data that improve data governance and compliance through the principle of data minimization, which states simply that data should be retained only as long as necessary, and only to fulfill a specific and defined purpose. By archiving historical data from production databases, file servers and email servers, organizations can improve the performance of their production applications, optimize infrastructure and reduce the high cost of production infrastructure because the data footprint is so much smaller.

Archives also enable application retirement and decommissioning of legacy systems that are no longer in use. Significant cost savings may be realized, and improved data compliance is achieved by rationalizing application portfolios and retiring outdated applications and data consistent with ILM and compliance policies.
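A policy-driven approach to archiving and retention can be sketched in a few lines of Python. The thresholds below (archive after one year of inactivity, purge after seven years) and the `lifecycle_action` function are hypothetical values chosen for illustration only; actual retention periods come from an organization's own ILM and compliance policies.

```python
from datetime import date, timedelta

# Hypothetical policy thresholds; real values are set by compliance policy.
ARCHIVE_AFTER = timedelta(days=365)
PURGE_AFTER = timedelta(days=365 * 7)


def lifecycle_action(last_used: date, today: date) -> str:
    """Classify a record by age: keep in production, archive, or purge."""
    age = today - last_used
    if age >= PURGE_AFTER:
        return "purge"
    if age >= ARCHIVE_AFTER:
        return "archive"
    return "keep_in_production"
```

For example, with `today = date(2024, 1, 1)`, a record last used on `date(2022, 1, 1)` is classified as `"archive"`. A fuller sketch would also need to account for exceptions such as legal holds before anything is purged.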

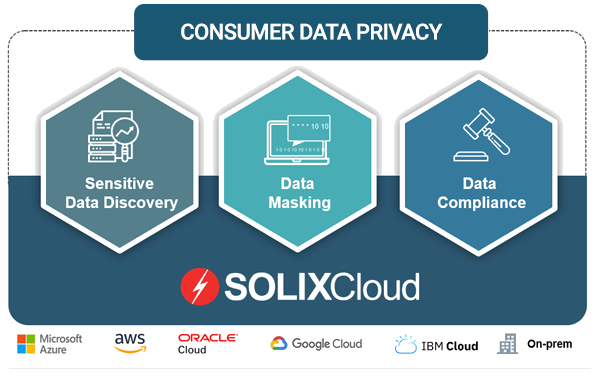

Consumer Data Privacy

Consumer data privacy regulations are emerging worldwide, sparked by the broad adoption of the EU’s GDPR. New privacy laws and regulations have now been enacted in over 100 countries and states, and significant fines have been levied for non-compliance. Consumer data privacy applications establish “privacy by design” and equip data controllers with critical tools such as sensitive data discovery, data masking, legal hold, redaction and erasure in order to comply with regulations governing personally identifiable information (PII).
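To make data masking concrete, here is a minimal Python sketch that masks email addresses and US-style Social Security numbers in free text using regular expressions. The patterns and the `mask_pii` function are simplified assumptions for illustration; production-grade sensitive data discovery generally needs far broader pattern coverage and validation than this.

```python
import re

# Simplified, illustrative patterns; they will not catch every real-world format.
EMAIL_RE = re.compile(
    r"\b([A-Za-z0-9._%+-])[A-Za-z0-9._%+-]*@([A-Za-z0-9.-]+\.[A-Za-z]{2,})\b"
)
SSN_RE = re.compile(r"\b\d{3}-\d{2}-(\d{4})\b")


def mask_pii(text: str) -> str:
    """Mask emails (keep first character and domain) and SSNs (keep last four digits)."""
    text = EMAIL_RE.sub(r"\1***@\2", text)
    text = SSN_RE.sub(r"***-**-\1", text)
    return text
```

Keeping the email domain and the last four digits preserves some analytical value while hiding the identifying portion; whether that level of masking is sufficient is a policy decision, not something this sketch settles.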

Conclusion

The data fabric is strategic to digital transformation, but moving large amounts of data from source to target also introduces a full stack of complexity. Proper planning of scope, objectives and approach eliminates most problems, but advanced SQL processing, machine learning and artificial intelligence consume large amounts of CPU power and network bandwidth, and when the data is not transformed or prepared properly, it may not produce the desired results. So plan carefully, and make sure your team is skilled in cloud native software, NoSQL, DevOps and the relevant cloud provider console.

Digital transformation requires a new information architecture called the data fabric to support data-driven applications, and cloud data management is the essential application framework that provides the tools to collect, store and prepare data for data pipelines. Get started now and propel your business as a data-driven enterprise.

Analyst Recognitions

- Forrester Wave™: Data Fabric (2024)

  Solix Technologies Recognized In The Forrester Wave™: Enterprise Data Fabric, Q1 2024

Why SOLIXCloud?

SOLIXCloud Enterprise Archiving delivers a fully managed, low-cost, scalable, elastic, secure and compliant data management solution for all enterprise data.

- Single Platform

  Unified archive for structured, unstructured and semi-structured data.

- Reduce Risk

  Policy-driven archiving and data retention.

- 24/7 Support

  Solix offers world-class support from experts 24/7 to meet your data management needs.

- On-demand Scale

  Elastic offering to scale storage and support with your project.

- Fully Managed

  Software-as-a-service offering.

- Secure & Compliant

  Comprehensive data governance.

- Low Cost

  Pay-as-you-go monthly subscription so you only purchase what you need.

- End-User Friendly

  End-user data access with flexible format options.

Resources

- White Paper

  The Solix Data Fabric: A Multi-cloud Information Architecture

- White Paper

  Data Fabric and the Future of Data Management

- White Paper

  Guide to Digital Transformation: Cloud Data Management
